AI for Science and Design of Ecosystems

Insights into how neural networks are revolutionizing physics, chemistry, biology, ecology, and the way we design environments and ecosystems everywhere.

Maximizing Ecosystem Services with Artificial Intelligence

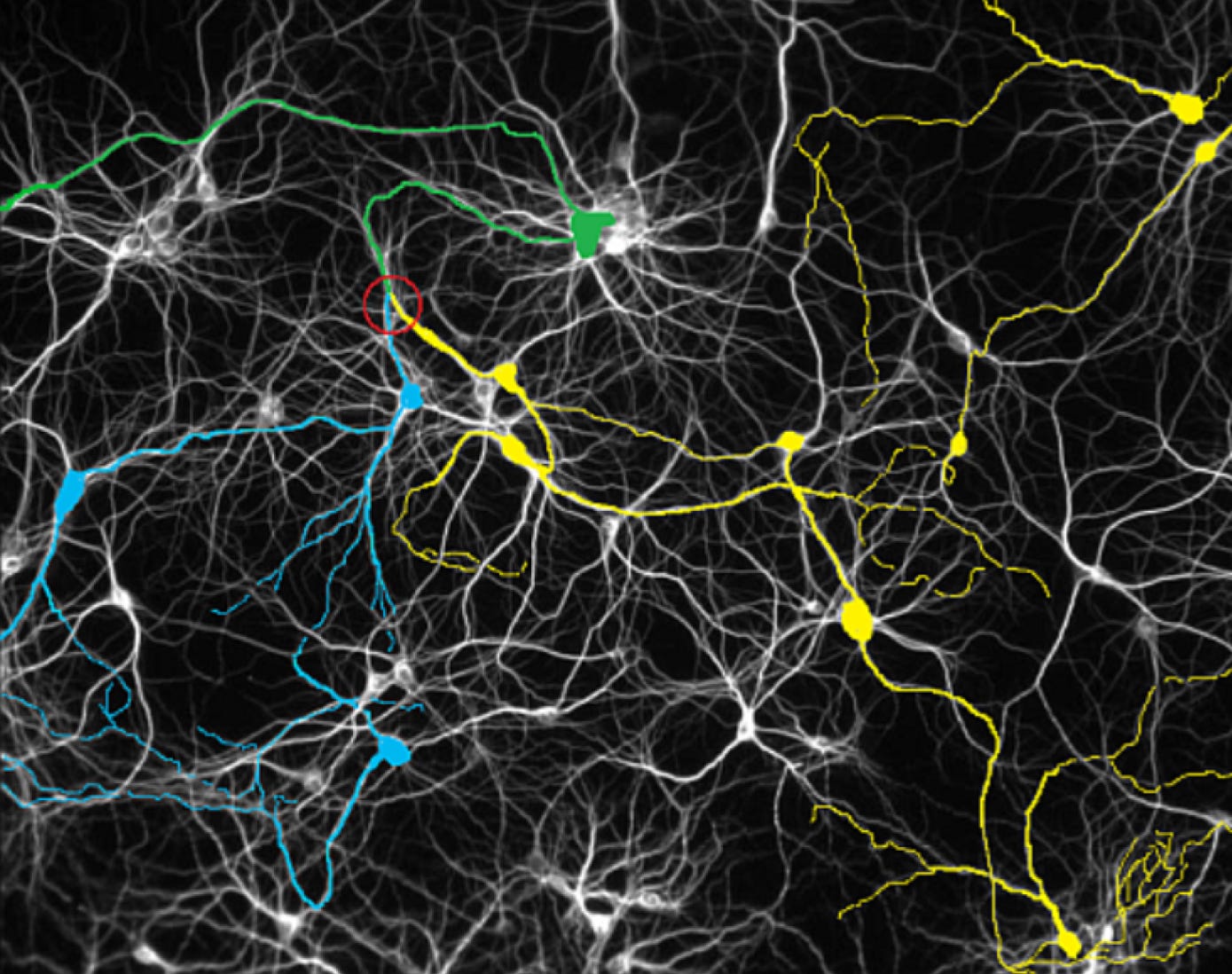

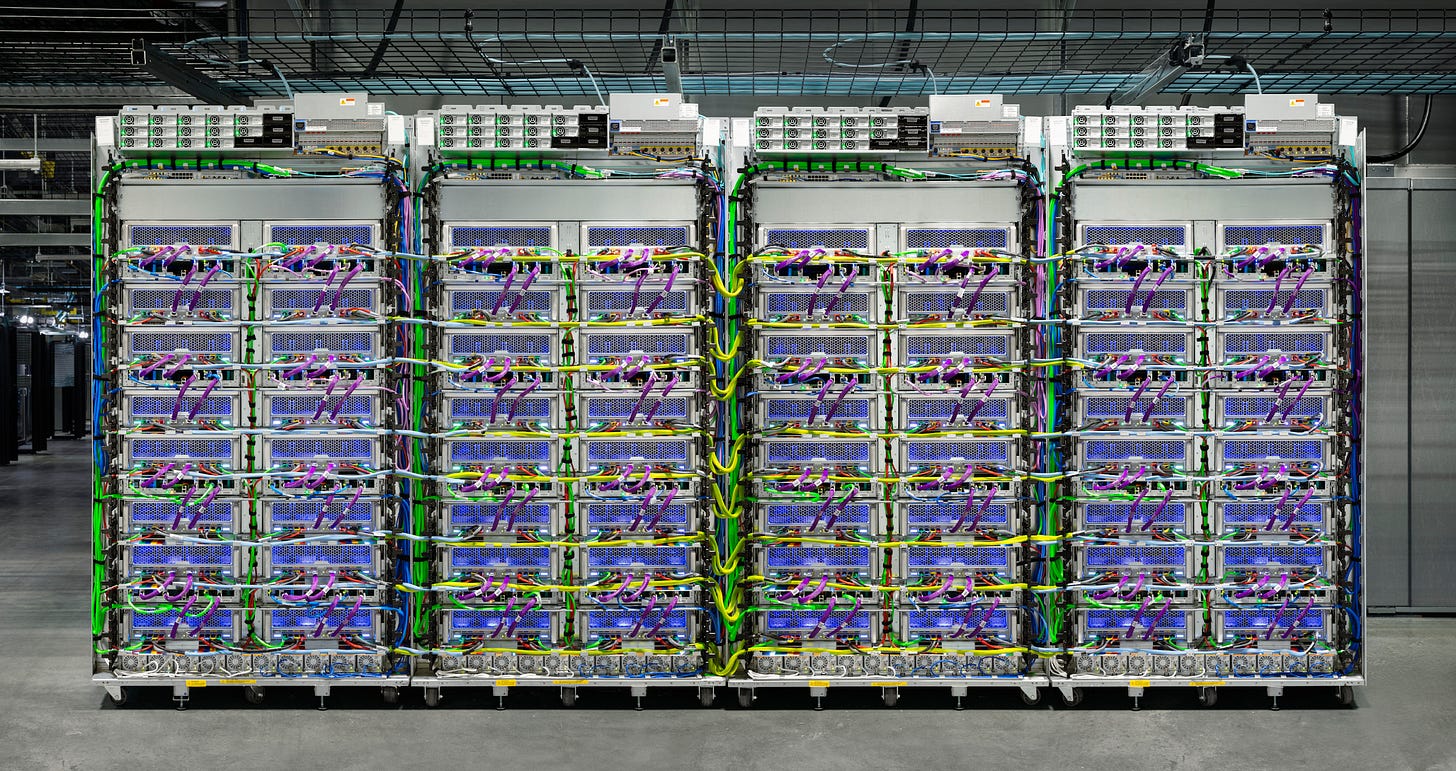

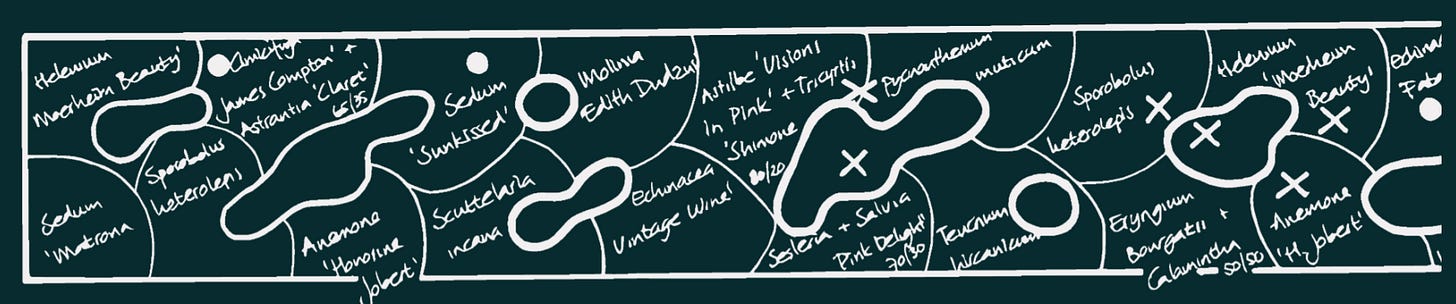

Ecodash is developing AI for the design of beautiful, sustainable, and enriching ecosystems. Picture (above) an exquisite planting designed by Piet Oudolf, based on a geospatial planting diagram (below). Oudolf’s living architecture is an example of the type of extraordinary nature that Ecodash is developing AI tools to help generate.

I believe the world needs a lot more of these kinds of gardens — over 100 million.

Landscape architects face a biodiversity crisis and an urgent need for climate resilience, to which these types of gardens intelligently respond. Ecodash is developing AI so that anyone can easily create the most flourishing, resilient, and abundantly resourceful plantings, for any local environment, given any functional design objectives. I believe this will be a priceless tool for the planet.

Let’s Review the State of AI and Science

Meanwhile, I’d like to address the broader state of the art in AI and science. I have some wonderful stories to share about how my own career in AI (and what’s possible in the world today) has been empowered by this year’s Nobel Prize winners.

2024 Nobel Prizes Awarded to AI Pioneers

Geoffrey Hinton and Demis Hassabis won Nobel Prizes in Physics and Chemistry, respectively, for their pioneering work in AI. I’ve been deeply inspired by them, especially Hinton.

“Solving Intelligence” for Chemistry

I’ll quickly address Hassabis before focusing on Hinton. Hassabis received the Chemistry prize for the creation of AlphaFold, a neural network model of protein structure. AlphaFold has been used to predict the structures of some 200 million proteins. AlphaFold’s Nature publication has 16,000 academic citations and 1.7 million views. Key takeaway: AI is learning the physical nature of chemistry and biology.

Neural Networks Inspired by the Physics of the Brain

Geoffrey Hinton won the Nobel Prize in Physics for brain-inspired neural networks.

I love the elegant scientific explanation for the prize:

“With artificial neural networks, the boundaries of physics are extended to host phenomena of life as well as computation.”

Hinton and other leading researchers (e.g., Yann LeCun, Yoshua Bengio, and Andrew Ng) helped end an “AI winter” and changed the world through physics-inspired models.

Beyond just the brain-inspired design of neural networks, a key takeaway is that neural networks are proving increasingly effective at modeling the physical world.

Researching with a Protégé of Geoffrey Hinton’s

In 2012, I began researching neural networks in a lab led by neuroscientist Randall O’Reilly. O’Reilly’s 1996 PhD thesis mentions “Hinton” 78 times and deeply thanks Hinton.

“Thanks to Geoffrey Hinton, for providing so much of the framework on which these ideas have been built.”

When I started researching with O’Reilly, I was told that I should try to understand all of the work by Hinton up to that point.

No problem!

Physics to the Rescue

I earned a degree in Physics in 2010 and had some skills in computational physics modeling, including modeling for the NASA Kepler Mission, thanks to a very smart professor.

“The most sophisticated physics models are composed of simple and provably correct parts”.

Biophysics of Brain Function

I was led to study neural networks by my curiosity about the biophysics of brains. Eventually, I came to have a basic understanding of “synaptic dynamics” for learning.

Diving into the Mind of Geoffrey Hinton

After spending several months learning about computational neuroscience and reading countless publications, I could see key principles behind the research led by Hinton on “deep neural networks”:

Data-driven modeling of brain physics should inspire and constrain AI models

See, e.g., Olshausen and Field’s 1996 paper in Nature for a model of the visual cortex that was highly influential in inspiring sparse coding and feature learning

Biological brains should not be the only constraint in the design of AI models

My lab full of neuroscientists said of Hinton’s Boltzmann Machines: “The brain is definitely not doing that.” My response: “Well, it works.”

Hinton et al. had a gold standard for AI modeling: reconstructive accuracy.

Statistically, models should be capable of generating new data that is quantitatively indistinguishable from real data
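The reconstructive-accuracy standard can be illustrated with a minimal sketch. It uses principal component analysis (PCA) as a stand-in for an autoencoder: PCA is the linear special case of the deep, nonlinear autoencoders Hinton et al. trained, not their method. The function name `pca_reconstruction_error` and the synthetic 10-dimensional dataset are hypothetical. Each point is compressed to `k` numbers, decoded back, and scored by mean squared reconstruction error.

```python
import numpy as np

def pca_reconstruction_error(data: np.ndarray, k: int) -> float:
    """Encode data into k dimensions with PCA (a linear autoencoder),
    decode it back, and return the mean squared reconstruction error."""
    mean = data.mean(axis=0)
    centered = data - mean
    # Rows of vt are principal directions, sorted by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:k]                          # (k, n_features) "encoder" weights
    codes = centered @ components.T              # encode: project onto k directions
    reconstruction = codes @ components + mean   # decode: map codes back to data space
    return float(np.mean((data - reconstruction) ** 2))

rng = np.random.default_rng(0)
# Hypothetical synthetic data: 500 points in 10-D driven by 2 hidden factors, plus small noise.
latent = rng.normal(size=(500, 2))
mixing = rng.normal(size=(2, 10))
data = latent @ mixing + 0.01 * rng.normal(size=(500, 10))

for k in (1, 2, 3):
    print(k, pca_reconstruction_error(data, k))
```

With `k=2` the error falls to roughly the noise level, because the synthetic data was built from two hidden factors: a good model is one that can rebuild the data from a compact code.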

My Master of Science thesis from 2013 was greatly inspired by Hinton, citing 8 of his publications on the statistical nature of intelligence, physics, and neural networks.

Nobel Prize interviewer at 3am:

“Would you say you were a computer scientist or… a physicist trying to understand biology…?”

Geoffrey Hinton:

“…I am someone who doesn’t really know what field he’s in… but in my attempts to understand how the brain works, I’ve helped to create a technology that works surprisingly well.”

Carrying the Torch of Geoffrey Hinton

Today, I still rely on Hinton's gold standard for AI modeling: models that can simulate new data in ways that are statistically indistinguishable from real data.

For example, GPT-4 can pass the Turing Test because it was trained to reconstruct raw language, word for word, with next-word prediction: predicting each token from the text that precedes it.
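To make the next-word objective concrete, here is a toy sketch assuming a word-level bigram model estimated by counting. GPT-4 itself is a large transformer trained on subword tokens, so this illustrates only the objective (the average cross-entropy of each actual next word) and how a trained model can be sampled to simulate new text. The tiny corpus and the function names are hypothetical.

```python
import math
import random
from collections import Counter, defaultdict

def train_bigram(words):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_prob(counts, prev, nxt):
    """Estimated probability of `nxt` given the previous word `prev`."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0

def cross_entropy(counts, words):
    """Average negative log-probability (nats) of each actual next word."""
    losses = [-math.log(next_word_prob(counts, p, n)) for p, n in zip(words, words[1:])]
    return sum(losses) / len(losses)

def generate(counts, start, length, rng):
    """Simulate new text by repeatedly sampling a next word from the model."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # word never seen with a successor: stop
            break
        choices, weights = zip(*followers.items())
        out.append(rng.choices(choices, weights=weights)[0])
    return out

# Hypothetical toy corpus.
corpus = "the cat sat on the mat and the dog sat on the rug".split()
model = train_bigram(corpus)
print(cross_entropy(model, corpus))
print(" ".join(generate(model, "the", 8, random.Random(0))))
```

Lower cross-entropy means the model reconstructs the training text more faithfully; sampling from the same probabilities produces new word sequences in the style of the data.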

Foundation models, which truly stand to revolutionize industries and society, are mostly about “unsupervised learning” (now often called self-supervised learning), a lot like the deep autoencoders of Hinton et al.

Mathematically, Hinton et al. provided computational tools to measure and minimize differences between high-dimensional statistical distributions.
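As a low-dimensional illustration of measuring and minimizing the gap between distributions, the sketch below assumes the Kullback-Leibler (KL) divergence as the measure and plain gradient descent on softmax logits as the minimizer. This is a generic textbook setup, not a reconstruction of Hinton et al.'s tools (such as Boltzmann machine learning), which tackle the much harder high-dimensional case. The four-outcome `p_data` distribution is hypothetical.

```python
import numpy as np

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions, in nats (terms with p = 0 contribute 0)."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

def softmax(z):
    """Turn arbitrary logits into a probability distribution."""
    e = np.exp(z - z.max())
    return e / e.sum()

# Hypothetical "data" distribution over 4 outcomes.
p_data = np.array([0.1, 0.2, 0.3, 0.4])

# Model: a softmax over learnable logits, starting from the uniform distribution.
logits = np.zeros(4)
learning_rate = 0.5
for step in range(500):
    q = softmax(logits)
    # Gradient of KL(p || softmax(logits)) with respect to the logits is q - p.
    logits -= learning_rate * (q - p_data)

print(kl_divergence(p_data, softmax(logits)))
```

Because the gradient is simply the difference between the model and data distributions, each step pulls the model toward the data until the divergence is essentially zero.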

What’s beautiful is just how simple the ultimate objective really is: simulation.

If you can simulate new scenarios from a raw dataset with a neural network, it turns out that problems like classification, understanding, and prediction become far easier.

By the way, perhaps it should not be surprising that physics provides us with exactly this capability: simulating new scenarios based on underlying equations.

For example, by reducing observed planetary data to equations of gravity, NASA physicists can successfully predict where to send their next big exploration mission.
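Simulating from equations can be sketched in a few lines, assuming a simplified two-body model: a single planet orbiting a fixed Sun under Newtonian gravity, integrated with the velocity Verlet (leapfrog) method. Real mission design uses far richer models; the units (AU and years, in which the Sun's GM equals 4π²) and the Earth-like test body are illustrative assumptions.

```python
import math

GM_SUN = 4 * math.pi ** 2  # AU^3 / yr^2, so a 1 AU circular orbit takes 1 year

def acceleration(x, y):
    """Newtonian gravitational acceleration toward a Sun fixed at the origin."""
    r3 = (x * x + y * y) ** 1.5
    return -GM_SUN * x / r3, -GM_SUN * y / r3

def simulate_orbit(x, y, vx, vy, dt, steps):
    """Integrate the orbit with the velocity Verlet (leapfrog) scheme."""
    ax, ay = acceleration(x, y)
    for _ in range(steps):
        # Half-step velocity update, full-step position update...
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
        x += dt * vx
        y += dt * vy
        # ...then recompute gravity and finish the velocity update.
        ax, ay = acceleration(x, y)
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
    return x, y, vx, vy

# Hypothetical test body: an Earth-like planet on a circular 1 AU orbit.
x, y, vx, vy = simulate_orbit(1.0, 0.0, 0.0, 2 * math.pi, dt=1e-4, steps=10_000)
print(x, y)  # after one simulated year the body should be back near (1, 0)
```

Changing the starting position or velocity lets the same equation "predict" a new scenario, which is the capability the paragraph above describes.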

Modern Tools for Discovering the Equations of Reality

Both physics and machine learning seek to discover predictive equations of reality. These fields are deeply allied. Physicists have always employed cutting-edge mathematical tools. Adopting machine learning tools is the way of physics in 2024.

Physics is the art of crafting predictive equations of reality from data

The essence of physics is not in studying the history of how past equations were formed — unfortunately, that’s how too many physics courses are taught.

The best physicists delve into large datasets to uncover the hidden physical laws. From patterns in data — and deep insight — physicists craft predictive equations.
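A classic example of crafting an equation from data is Kepler's third law. The sketch below assumes approximate textbook values for six planets' semi-major axes (in AU) and orbital periods (in years) and fits a power law `T = C * a**k` by linear regression on logarithms. The fitted exponent comes out very close to 1.5, i.e. T² ∝ a³.

```python
import numpy as np

# Approximate orbital data: semi-major axis a (AU) and period T (years).
planets = {
    "Mercury": (0.387, 0.241),
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.862),
    "Saturn": (9.537, 29.457),
}
a = np.array([v[0] for v in planets.values()])
T = np.array([v[1] for v in planets.values()])

# If T = C * a**k, then log T = log C + k * log a: a straight line in log-log space.
# np.polyfit returns coefficients from highest degree down: (slope, intercept).
k, log_c = np.polyfit(np.log(a), np.log(T), deg=1)
print(f"T ≈ {np.exp(log_c):.3f} * a^{k:.3f}")
```

The human insight here is choosing the logarithmic transform; once the data lies on a line, the equation falls out of a simple fit.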

Machine learning is a tool for automatically discovering predictive equations from data

It turns out neural networks are the best friend of physicists (and scientific modelers). These tools automatically learn the structure of predictive models directly from raw data.
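Neural networks usually encode what they learn implicitly, inside their weights. To show explicit equation discovery in a small, inspectable way, the sketch below swaps in a different technique: sparse regression over a library of candidate terms, using sequentially thresholded least squares in the spirit of SINDy (Brunton, Proctor and Kutz). The falling-object "sensor data" is synthetic, and the candidate library, threshold, and `stlsq` name are hypothetical choices.

```python
import numpy as np

def stlsq(library, target, threshold, iterations=10):
    """Sequentially thresholded least squares: fit, zero out small
    coefficients, and refit using only the surviving candidate terms."""
    coef = np.linalg.lstsq(library, target, rcond=None)[0]
    for _ in range(iterations):
        small = np.abs(coef) < threshold
        coef[small] = 0.0
        keep = ~small
        if keep.any():
            coef[keep] = np.linalg.lstsq(library[:, keep], target, rcond=None)[0]
    return coef

# Hypothetical sensor data: distance fallen, y = 0.5 * g * t^2, plus measurement noise.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 2.0, 200)
y = 0.5 * 9.81 * t**2 + rng.normal(scale=0.01, size=t.size)

# Candidate terms the method may choose from.
names = ["1", "t", "t^2", "t^3"]
library = np.column_stack([np.ones_like(t), t, t**2, t**3])
coef = stlsq(library, y, threshold=0.5)
print({n: round(float(c), 3) for n, c in zip(names, coef) if c != 0})
```

Only the t² term survives, with a coefficient near g/2 ≈ 4.905, so the method recovers the form y = ½gt² without being told which term matters.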

Imagine a neural network discovering E=mc² — and every other physics equation? Input sensor datasets, output equations discovered by machines.

That's the future of physics: infinitely scalable Einstein-like neural networks.
